There’s a powerful engine revving quietly under the hood of AI adoption: running LLMs locally. Whether you’re a founder, systems architect, or digital strategist, the decision to bring models in-house unlocks remarkable advantages.

🔐 1. Privacy, Security & Data Sovereignty

Keeping your LLM on-premises means prompts and outputs never leave your own infrastructure, so patient records, legal documents, and account data stay under your control (Medium, clavistechnologies.com). This supports compliance with GDPR, HIPAA, and other regulations (clavistechnologies.com).

Enterprise-grade audit logs and encryption, both at rest and in transit, reinforce this protection.

⚡ 2. Reduced Latency & Real‑Time Performance

Local models remove the network round trip to a remote API, so response time comes down to the model's own inference speed. That matters for chatbots, voice assistants, and mission-critical edge apps (athensintech.com).
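To check whether the latency gain holds for your own workload, it helps to measure it instead of assuming it. The snippet below is a minimal sketch of a timing harness. The names `median_latency_ms` and `fake_local_call` are hypothetical and exist only for illustration, and the 10 ms sleep stands in for a real inference call.

```python
import statistics
import time


def median_latency_ms(call, runs=5):
    """Run `call` several times and return the median wall-clock latency in ms."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000)
    return statistics.median(samples)


def fake_local_call():
    """Placeholder for a real request to a local model (assumed ~10 ms)."""
    time.sleep(0.01)


if __name__ == "__main__":
    print(f"median latency: {median_latency_ms(fake_local_call):.1f} ms")
```

Swap `fake_local_call` for a function that sends the same prompt to your local model, then to your cloud provider, and compare the two medians.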

💰 3. Cost Efficiency Over Time

Cloud LLMs can levy steep usage fees per token or API call. A local deployment has upfront hardware costs, but its running costs are predictable and potentially lower in the long term, especially for high-throughput use cases (athensintech.com).
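Whether local hardware actually pays off depends on volume, so a quick break-even estimate is worth running before you buy anything. The sketch below is a simplified model, not a full cost analysis. The function name `break_even_months` and every figure in the example (hardware price, monthly operating cost, token volume, API price) are assumptions chosen for illustration, not quotes from any vendor.

```python
import math


def break_even_months(hardware_cost, monthly_local_opex,
                      tokens_per_month, api_price_per_million_tokens):
    """Return the number of months until local hardware pays for itself.

    Returns None when the API is cheaper per month than running locally,
    in which case the hardware never breaks even.
    """
    monthly_api_cost = tokens_per_month / 1_000_000 * api_price_per_million_tokens
    monthly_saving = monthly_api_cost - monthly_local_opex
    if monthly_saving <= 0:
        return None
    return math.ceil(hardware_cost / monthly_saving)


# Illustrative numbers only: a 12,000 server, 300/month for power and upkeep,
# 500M tokens/month, and an assumed API price of 2.00 per million tokens.
high_volume = break_even_months(12_000, 300, 500_000_000, 2.0)

# Same setup at 50M tokens/month: the API bill is lower than the local opex.
low_volume = break_even_months(12_000, 300, 50_000_000, 2.0)

if __name__ == "__main__":
    print(high_volume)  # 18
    print(low_volume)   # None
```

With these assumed inputs, the high-volume case breaks even after 18 months, while the low-volume case never does. That is why the cost argument applies mainly to sustained, high-throughput workloads.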

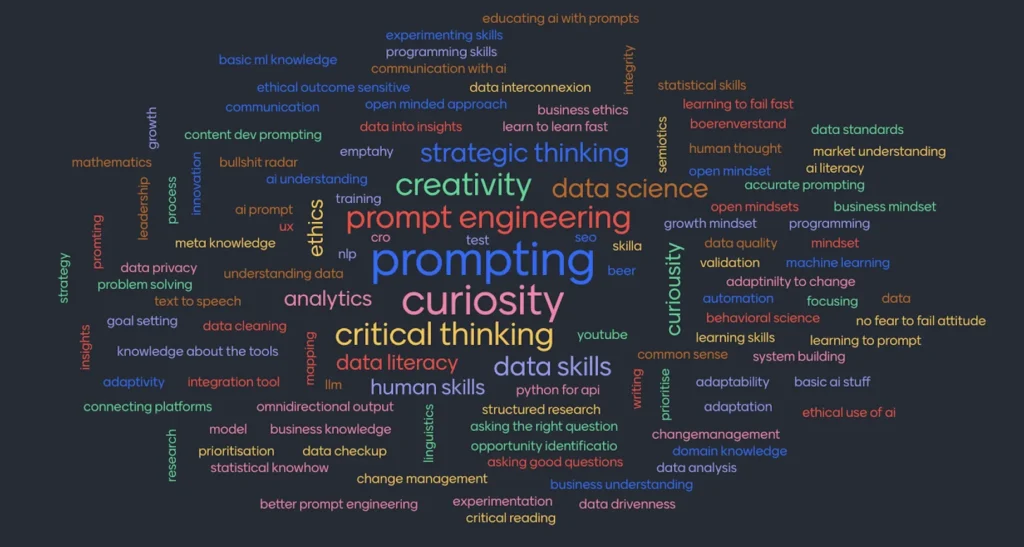

🛠️ 4. Customization & Fine‑Tuning

Local LLMs let you fine-tune on domain-specific data, embedding proprietary knowledge in the model—vital for legal, medical, or technical language (clavistechnologies.com).

🌍 5. Independence & Reliability

No dependence on third-party APIs—no surprise downtime, rate limits, or policy changes (athensintech.com). Keep working even when internet or cloud services are down.

🧭 6. Integration & Compliance Control

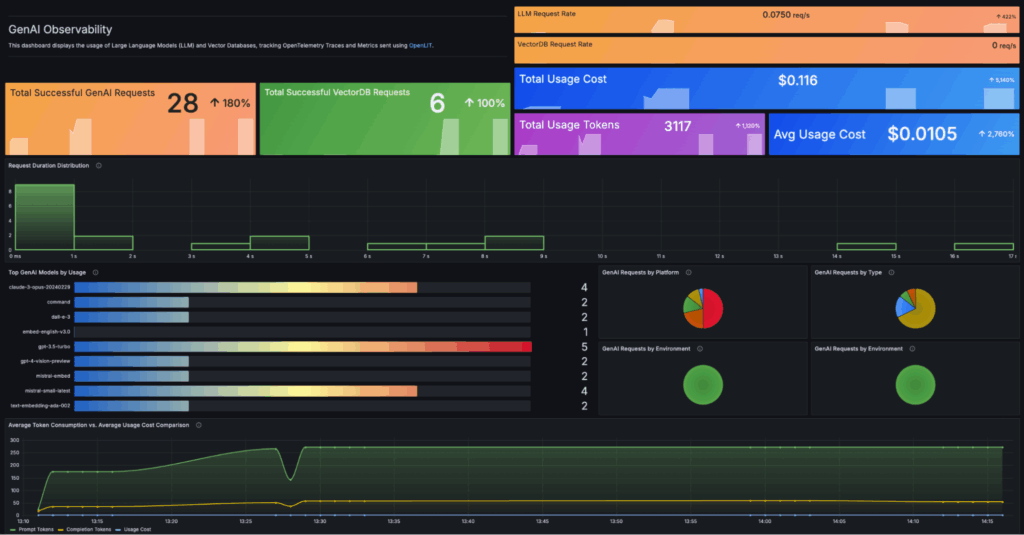

Running locally lets you tailor infrastructure to strict regulatory benchmarks, maintain full observability, and tie model usage into CI/CD pipelines (DataCamp, clavistechnologies.com).

📈 7. Scalability & Edge Deployment

Smaller, specialized models (small language models, or "SLMs") can run even on edge devices, sidestepping cloud costs and bringing AI to IoT or mobile apps (TechRadar).

🧩 8. Intellectual Property & Innovation

You control the model weights and data pipelines, which enables proprietary innovation and keeps your IP from leaking to third-party providers (clavistechnologies.com).

🧾 Summary Table of Local LLM Benefits

| Benefit | Why It Matters |
|---|---|
| Privacy & Data Control | Keeps sensitive data fully internal |
| Low Latency | Real-time responses for mission-critical services |
| Cost Efficiency | Fixed costs beat expensive API usage |
| Customization | Tailored language models for your domain |
| Reliability & Independence | No outages due to external services |
| Compliance & Auditability | Full control for regulatory needs |
| Scalability & Edge AI | Works even without cloud connectivity |
| IP Protection | Strengthens proprietary advantage |

Final Take

Local LLMs enable powerful, compliant, and cost-effective AI, delivering faster responses, tighter data control, domain-specific accuracy, and long-term ROI. The initial investment in hardware and setup pays off in strategic autonomy, innovation, and resilience.